It starts with a gentle chime or a courteous alert reminding you to stretch, take a deep breath, or leave early to avoid traffic. The helpfulness seems ordinary, even reassuring. But somewhere between your morning coffee and the third draft of your email, your assistant starts to anticipate your tone, your timing, and even your hesitation. It’s not magic; it’s mathematics, honed over millions of tiny interactions into a digital portrait of you.

Always observing, never sleeping, and constantly learning, digital assistants have come to resemble our subconscious minds to a remarkable degree. They pay attention to how you say things as well as what you say. Your late-night messages can signal weariness, your calendar updates optimism, and the frequency of your reminders anxiety. Over time these signals form patterns that show who you are before you recognize it yourself. Using machine learning and natural language understanding, assistants can read emotional nuance much as a close friend might read a sigh.

The way these systems combine engineering and empathy is what makes this transition so intriguing. As data comes in, algorithms start to identify correlations that are invisible to the human eye. They pick up on when you need encouragement and when it’s better to keep quiet. With a sort of silent devotion, they adjust to your rhythm and make suggestions that feel almost psychic. This is no longer just automation; it is algorithmic intuition.

| Aspect | Description |

|---|---|

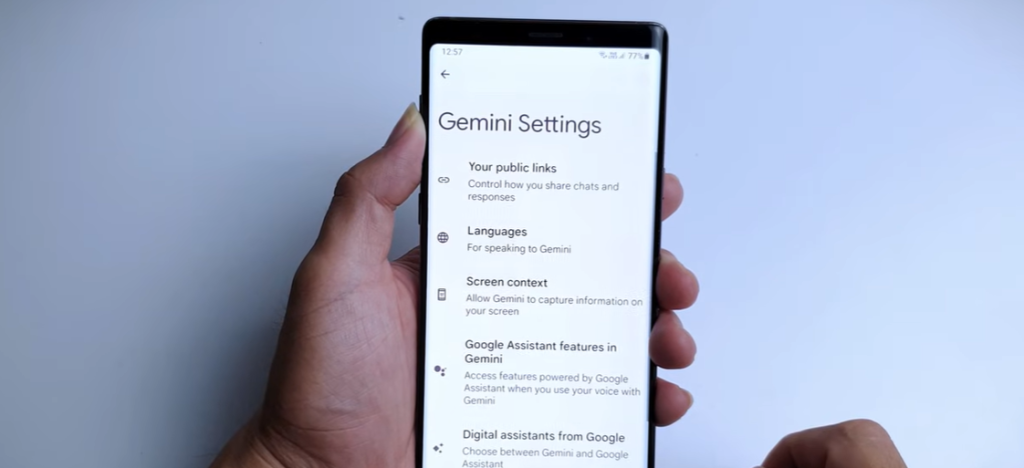

| Origin | Introduced with early voice interfaces like Siri (2011) and Alexa (2014), evolving into AI-driven ecosystems like ChatGPT and Gemini. |

| Core Function | Uses Natural Language Processing (NLP) and Machine Learning to interpret user behavior and predict preferences. |

| Capabilities | Schedules tasks, answers queries, anticipates needs, and learns emotional and contextual cues. |

| Ethical Concerns | Privacy erosion, data dependency, and emotional manipulation through algorithmic familiarity. |

| Industry Impact | Companies like Google, OpenAI, and Amazon compete to make assistants indispensable companions. |

| Societal Implication | Blurs boundaries between human intuition and machine prediction, challenging autonomy and self-awareness. |

| Reference | Psychology Today – “When Your AI Assistant Is Much Smarter Than You” |

According to John Nosta, as assistants grow more proficient, people risk outsourcing not only their tasks but also their thinking; he called this phenomenon “a crisis of cognitive ownership.” That fear is legitimate, but it overlooks something just as potent: the potential for this collaboration to strengthen rather than weaken our intelligence. Digital assistants take care of repetitive tasks, freeing the human mind to concentrate on the things that make us special: creativity, empathy, and meaning.

Think about how adept predictive algorithms have become at spotting emotional changes. Spotify may suggest gloomy playlists that eerily fit your mood, seeming to anticipate heartbreak before you have said a word. By identifying avoidance patterns, Google Maps could notice that you are taking longer routes home, perhaps unconsciously postponing an awkward situation. These remarkably efficient assistants are not guessing; they are interpreting.

Yet the closeness they establish causes discomfort. Being understood is one thing; being fully captured in data is quite another. Each click, question, and pause becomes an admission of preference and a stepping stone to a digital identity you were unaware you were creating. Still, we consent enthusiastically. We exchange privacy for ease and awareness for help. The exchange is subtle, incremental, and exquisitely designed, which is why it seems harmless.

Technologists and celebrities have publicly wrestled with this tension. Elon Musk cautions about AI’s manipulative potential, while Sundar Pichai sees assistants as extensions of human consciousness, partners that empower rather than control. Artists like Grimes have experimented with AI as a creative partner, and others, like will.i.am, use it to enhance human emotion through digital collaboration. Their visions for the future point to a shared environment where machine accuracy and human intuition coexist rather than compete.

The way society views these helpers is indicative of a larger cultural change. In the same way that previous generations trusted intuition, we have started to trust algorithms. It feels natural when ChatGPT drafts your apology or Siri reschedules your meeting, because the result is precise, tailored, and oddly sympathetic. Emotional AI is especially inventive here because it connects rather than merely automates. It develops a kind of coded empathy.

However, beneath the allure is a subtle reorganization of accountability. When an assistant makes the right decision for you, your reliance on it deepens. When it makes a mistake, the blame seems to be split between you and it. This diffusion alters accountability in ways that ethicists and psychologists are only now starting to understand. As machines become more like humans, our sense of self may become more mechanical.

There is still reason for hope. The most encouraging view of this development treats AI not as a usurper but as a mirror, a means of seeing ourselves with unprecedented clarity. By objectively evaluating our decisions, assistants can help us identify blind spots, motivations, and habits. For those who struggle with focus or loneliness, these systems can offer gentle, patient, and attentive companionship. For those managing complex lives, their efficiency is truly transformative.